Introduction

In the ever-evolving cybersecurity landscape, Cloudflare’s recently disclosed breach is another high-profile compromise, challenging the expectation that tech giants, with their vast resources, are less susceptible to such lapses. This sophisticated, nation-state-level attack exploited preventable weaknesses, echoing the recent Midnight Blizzard compromise of Microsoft and highlighting a series of oversights within Cloudflare.

TL;DR Summary:

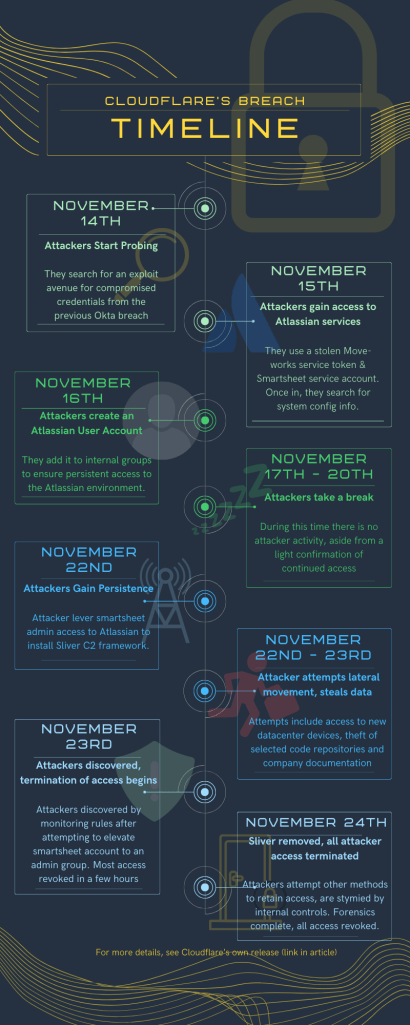

- Incident Overview: A sophisticated nation-state-level threat actor compromised Cloudflare in late November 2023 through credentials stolen in October’s Okta breach.

- Attack Details: The attackers aimed to establish long-term network presence but were evicted by Cloudflare’s rapid response, though not before they stole significant internal documentation and code repositories.

- Response and Cleanup: Cloudflare’s laudably extensive post-incident cleanup involved a wide-scale security overhaul and significant resources, demonstrating a serious approach to incident response.

- Of Interest: Although Cloudflare reports no customer impact, the stolen source code could let attackers find vulnerabilities that Cloudflare itself has not yet discovered and exploit them in future attacks.

The Incident Unpacked

The attack, detailed by Cloudflare, leveraged credentials compromised in the October Okta breach, which Cloudflare had not rotated under the mistaken belief that they were unused. Before being discovered and kicked out of Cloudflare’s network, the attackers pilfered large amounts of company documentation from various content services, as well as dozens of source code repositories covering a variety of infrastructure-centric topics.

Although the attackers attempted to use their initial access and other vulnerabilities to establish long-term presence and move laterally to other systems, their ability to do so was greatly limited by Cloudflare’s existing internal security practices, per an analysis by Cloudflare and CrowdStrike.

During the breach and in its immediate aftermath, Cloudflare’s handling of the incident showcased both strengths and weaknesses.

Cloudflare’s Response: Hits and Misses

The Good…

At the top level, Cloudflare’s response to the incident and its follow-up were strong.

- The company’s rapid engagement of a skilled incident response partner like CrowdStrike showed a solid incident response plan and execution.

- Mobilization of significant resources for cleanup activities reflects a strong management commitment to addressing the issues that surfaced.

- The cleanup itself was well executed: Cloudflare rotated many internal credentials, scoured code repositories for potentially exposed secrets, and generally defaulted to resetting or sunsetting any asset even remotely suspected of compromise. This reflected a thorough post-mortem and action plan.

Perhaps most importantly, the attacker’s likely objective (per a number of external analyses) was to establish long-term presence in, and access to, Cloudflare’s networks for use in future attacks. That primary objective was stymied, despite the ancillary data theft… at least for now.

…The Bad…

However, this incident was a clear miss in preemptive security measures.

- Sunsetting unused assets either wasn’t happening or wasn’t happening consistently or rapidly enough to prevent some of them being used for initial access in the breach.

- Decision-making around earlier incidents seems inconsistent or illogical. Cloudflare chose not to rotate some credentials compromised in last fall’s Okta breach because it was confident they weren’t in use, yet it did not decommission them, which would have been the logical next step for assets believed to be unused.

Image: vaeenma

…The Questionable…

A few points of the cleanup raise questions as to whether they reflect bridging material gaps or a “Double-do” of an already healthy starting place.

- The cleanup included a commitment to “physically segment test and staging systems”, which is either plugging a large hole or going above and beyond, depending on what specifically was being segmented from what. Without an insider’s perspective, it’s hard to tell.

- Checking for and removing secrets from documentation and source code should ideally already be a part of (S)SDLC practice. If this was being done for the first time in response to the incident, that would be concerning. However, if it was a reinforcement of existing practices, that would be commendable.

- Searching out and sunsetting unused assets (e.g. accounts, systems) is part of healthy config and asset management. Hopefully it was already happening before the breach.
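To make the secrets check above concrete, here is a minimal Python sketch of the kind of scan that can run routinely over source and documentation. It is an illustration only, not Cloudflare’s tooling: the `scan_text` function and the three patterns in `SECRET_PATTERNS` are assumptions chosen for the example, and real scanners ship far larger rule sets.

```python
import re

# Hypothetical rule set for illustration; not an exhaustive or vendor-supplied list.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(
        r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"
    ),
    "hardcoded_credential": re.compile(
        r"(?i)\b(?:password|passwd|secret|api_key|token)\b\s*[:=]\s*['\"][^'\"]{8,}['\"]"
    ),
}


def scan_text(text):
    """Return (line_number, rule_name) pairs for every line matching a rule."""
    findings = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for rule_name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((line_number, rule_name))
    return findings
```

Run as a pre-commit or CI step, a check like this turns secret removal into an ongoing practice rather than a one-off cleanup after an incident.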

… and The Ugly…

It’s also interesting to note that had Cloudflare treated its third-party breach (through Okta) more like it treated its internal breach (this one), the current breach likely would not have been possible in the first place.

The Bigger Picture: Lessons and Implications

This breach is a case study in the essential role of supply chain and vendor risk management, as well as the importance of strong oversight of assets. If this sounds repetitive, it’s because those same themes echo in other notable events, such as the Okta and MOVEit incidents and Microsoft’s recent compromise.

Collectively these incidents underscore the interconnectedness of threats in the landscape, and the cascading effects of a single vulnerability being exploited, particularly given the modern cloud-first, “…as a service” design philosophy.

At the same time, in most of those cases, diligent application of any one of several potential protections could have prevented or severely limited impact – highlighting the importance of a consistently applied and enforced layered defense.

Finally, with attackers having stolen some source code, there is always the long-tail risk that the code could be mined for further vulnerabilities to exploit in future attacks, which makes a strong case for tight controls around source storage, modification, and usage in any tech firm.

Conclusion: The Breach in Perspective

Image: Olivier26

Cloudflare’s recent breach underscores the continuous need for vigilance and comprehensive risk management across supply chain, asset, and configuration management. While the company’s immediate response to the incident was commendable, we shouldn’t forget that this event seems to have been very preventable, and that no organization is immune to gaps in policy and practice. For security professionals, the goal is not to implement “THE” solution, but to continually refine layered defenses to reduce probability and impact as much as possible for the time when our organization is in the crosshairs…

How would yours fare if similarly tested?

Key Lessons: A Summary

- Third-party risk management, both identification AND response, is key. Knowing your exposure matters, but so does an appropriate response: treat supply chain breaches much as you would internal ones.

- Configuration and asset management, done well, eliminates many avenues of attack. Periodic review of assets and configurations, and sunsetting of those no longer needed, yields a tremendous reduction in attack surface. It isn’t glamorous, but it’s hugely valuable.
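As a rough illustration of both lessons, the sketch below encodes a simple triage rule for credentials during a periodic review. Everything in it is hypothetical: the `Credential` fields, the `triage` function, and the 90-day staleness threshold are assumptions made for the example, not a description of any vendor’s process.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class Credential:
    name: str
    last_used: Optional[date]       # None means no recorded use
    exposed_in_vendor_breach: bool  # flagged during third-party incident review


def triage(cred, today, stale_after_days=90):
    """Return 'decommission', 'rotate', or 'keep' for one credential."""
    cutoff = today - timedelta(days=stale_after_days)
    unused = cred.last_used is None or cred.last_used < cutoff
    if unused:
        # Believed unused: remove it, whether or not it was exposed.
        return "decommission"
    if cred.exposed_in_vendor_breach:
        # Still in use but exposed: rotate, as after an internal breach.
        return "rotate"
    return "keep"
```

The point of the design is what is missing: there is no branch that leaves an exposed credential untouched because it is thought to be unused.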
