Cover image: weerapat

Introduction

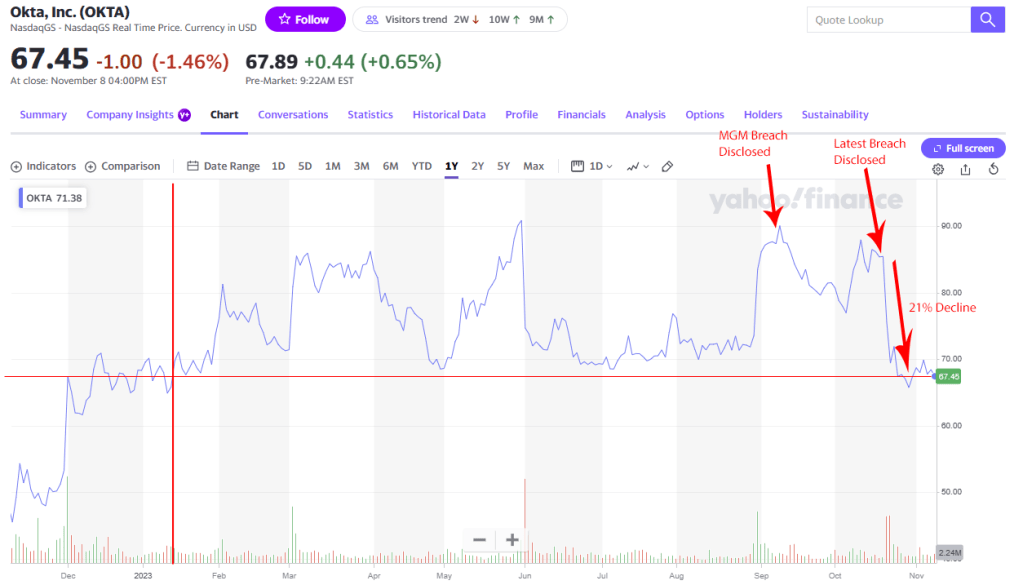

Okta has been in the news a lot over the last few months, and not for good reasons: its role in the attack chain of the MGM breach, a September attempt by threat actors to social-engineer their way past Okta’s multi-factor authentication, more recent third-party compromises, and finally the latest compromise of customer data.

Okta has been a frequent target for threat actors going back years (see the March 2022 Lapsus$ hack, the December 2022 source code theft, and others before). The company has been taking a beating, losing $2 billion in market cap in late October alone. The current price of ~$67.45 per share (down 21% from highs near $85 in 3Q23) has effectively wiped out all third-quarter and October gains, coming to rest at the same price as mid-January.

Late last week Okta disclosed details of the latest breach, including the initial access method. The details are quite interesting and tell a cautionary tale that not even the largest and most trusted security organizations are immune to overconfidence. There are some key learnings from what they revealed…

TL;DR: Summary

- Okta was breached through a combination of poor endpoint controls and bad employee behavior.

- Attackers exploited gaps in detection rules and the lack of robust session controls to hijack customer sessions.

- Attackers had access for roughly three weeks (September 28 to October 17) before Okta shut things down.

- Okta took two weeks to respond to customer inquiries and three weeks to fully disclose the breach publicly.

The Breach Breakdown

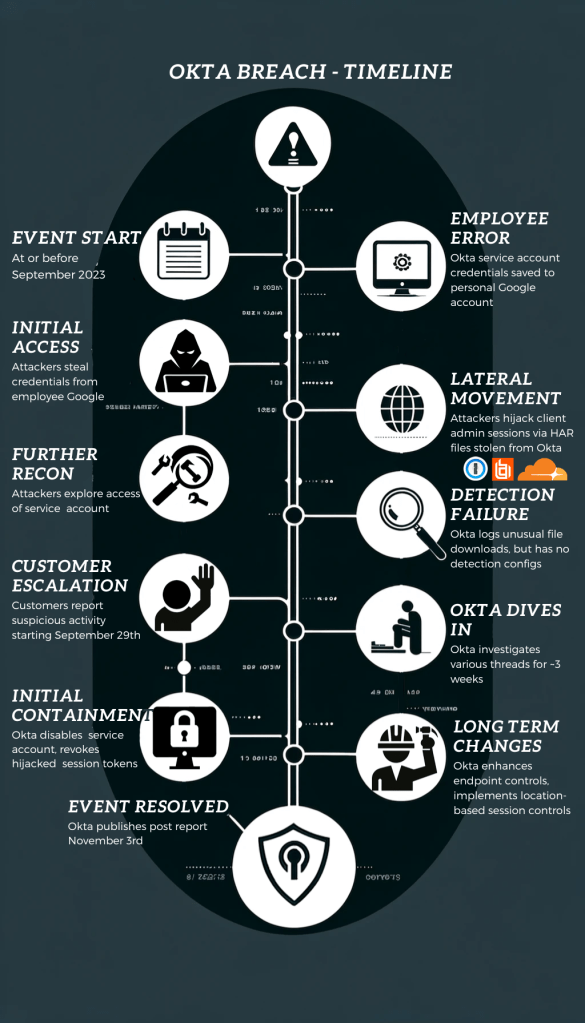

According to Okta (and subsequent news reports), unauthorized access to its systems from September 28 to October 17, 2023 resulted in a breach affecting 134 customers, including cybersecurity stalwarts 1Password, BeyondTrust, and Cloudflare.

Initial access: A leaky endpoint

Okta CISO David Bradbury admitted in the report that two failures led to the initial compromise:

- An employee being able to access their personal Gmail account on a company laptop.

- Endpoint controls failing to prevent both this and the employee subsequently saving Okta service account credentials into their personal account.

Threat actors compromised the employee’s personal account and leveraged the saved service account credentials to access the company’s internal support system. From there, they downloaded files associated with a number of customer support cases, including HAR files containing session tokens, which they then used to hijack customer Okta sessions.
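
Because the stolen HAR files carried live session tokens, one practical mitigation is to scrub them before they are attached to a support case. The following is a minimal sketch of that idea, not Okta's tooling: it assumes the standard HAR layout (`log.entries[].request/response` with `headers` and `cookies` arrays), and the function name and the set of header names treated as sensitive are illustrative assumptions.

```python
import copy

# Assumption: these header names are the ones most likely to carry session
# credentials. Extend the set for your own applications.
SENSITIVE_HEADERS = {"authorization", "cookie", "set-cookie"}
REDACTED = "[REDACTED]"


def sanitize_har(har: dict) -> dict:
    """Return a copy of a parsed HAR document with credentials redacted."""
    clean = copy.deepcopy(har)  # never mutate the caller's data
    for entry in clean.get("log", {}).get("entries", []):
        for side in ("request", "response"):
            message = entry.get(side, {})
            # Redact credential-bearing headers by name (case-insensitive).
            for header in message.get("headers", []):
                if header.get("name", "").lower() in SENSITIVE_HEADERS:
                    header["value"] = REDACTED
            # HAR also stores parsed cookies separately; redact every value.
            for cookie in message.get("cookies", []):
                cookie["value"] = REDACTED
    return clean
```

A caller would pass in the result of `json.load` on the HAR file and write the returned copy back out. This sketch does not inspect query strings or request bodies, which can also contain tokens, so it should be treated as a starting point only.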

Missed Cues

Okta admitted that it detected nothing suspicious after the threat actor’s initial access and activity; it was 14 days into the investigation before the company found specific evidence of the attack. This was despite the existence of logs that could potentially have indicated the activity.

The incident came to their attention via customer reporting over a span of 2 weeks, with a customer-reported suspicious IP leading Okta to eventually track down the specific activity.

Actions Post-Breach

In response, tactically, Okta revoked the session tokens used in the attack and disabled the compromised service account.

As a more permanent protection, they:

- Pushed out a configuration barring personal account logins on company endpoints.

- Deployed additional monitoring and detection rules to find similar malicious activity in their customer support system.

- Introduced location-based administrator session token binding as a security enhancement (though this feature needs to be enabled on the customer side).
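
To make the idea of location-based session binding concrete, here is a minimal sketch of one way a token can be tied to the network it was issued on. This is not Okta's implementation: the use of a /24 IPv4 prefix as the "location", the HMAC construction, and all names (`SECRET_KEY`, `issue_token`, `verify_token`) are assumptions chosen for illustration.

```python
import hashlib
import hmac
import ipaddress

# Hypothetical key for the demo; a real service would load this from a
# secrets manager and rotate it.
SECRET_KEY = b"demo-only-secret"


def network_of(ip: str, prefix: int = 24) -> str:
    """Collapse an IPv4 address to its enclosing network (assumed /24)."""
    return str(ipaddress.ip_network(f"{ip}/{prefix}", strict=False))


def _tag(session_id: str, ip: str) -> str:
    # The tag covers both the session ID and the client's network.
    msg = f"{session_id}|{network_of(ip)}".encode()
    return hmac.new(SECRET_KEY, msg, hashlib.sha256).hexdigest()


def issue_token(session_id: str, ip: str) -> str:
    """Return the session ID plus a tag binding it to the client's network."""
    return f"{session_id}.{_tag(session_id, ip)}"


def verify_token(token: str, ip: str) -> bool:
    """Accept the token only when presented from the network it was issued on."""
    session_id, _, tag = token.rpartition(".")
    return hmac.compare_digest(tag, _tag(session_id, ip))
```

With this scheme, a token lifted from a HAR file and replayed from an attacker's network fails verification, while the legitimate admin moving between addresses in the same /24 is unaffected. A production design would more likely bind to something coarser or more stable than a raw prefix and force re-authentication on mismatch rather than silently rejecting.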

Lessons and takeaways

The incident and its response highlighted a number of gaps, some embarrassing, some within the cone of reasonability:

- Embarrassing: Lack of endpoint controls: The absence of measures to prevent personal app access on corporate devices was a critical lapse. This is a fundamental security practice that Okta either overlooked or implemented poorly.

- Embarrassing: Lack of location-based session control or monitoring: The lack of controls, preventative or detective, for unusual session activity was a real gap. This is doubly true for a scenario like admin sessions from new IP addresses. While session management can be complex, even common commercial services require confirmation or re-authentication when a connection from a new location is detected.

- May be reasonable: Failed monitoring: Though detectable actions taken by the attackers were logged, the lack of associated correlation rules in SIEM systems hindered early detection. However, it’s also crucial to acknowledge that detecting every threat isn’t feasible. Without knowing the details of the specific logs involved in this case, it’s hard to pass judgement as to whether this was a major lapse.

- Embarrassing: Customer communication and breach notification timing: Okta’s two-week wait to acknowledge BeyondTrust’s initial report and its three-week timeframe for public notification highlight a need for timely communication. It’s unlikely Okta has any legal liability, given the jurisdictions involved and the fact that fewer than 72 hours elapsed between confirming the full set of impacted accounts and notifying them, but this was still well short of an “excellent” response.

- Potentially embarrassing: Poor security awareness and/or “fair use” policy: It’s not clear from Okta’s statement whether the employee saved the credentials knowingly or by mistake. Regardless, it points to a need for either a more robust security training program or a better (or better enforced) “fair use” policy.
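
The monitoring gaps in the list above (sessions reused from new locations, logged but uncorrelated activity) lend themselves to a very simple correlation rule. The sketch below flags any event where an existing session ID shows up from an IP address it has not used before. The event shape (`session_id` and `ip` keys) and the function name are hypothetical; real SIEM rules would be written in the platform's own query language and against its actual log schema.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set


def flag_new_ip_sessions(events: Iterable[dict]) -> List[dict]:
    """Return events where a known session appears from a previously unseen IP.

    Events are assumed to arrive in chronological order.
    """
    seen: Dict[str, Set[str]] = defaultdict(set)
    alerts: List[dict] = []
    for event in events:
        session, ip = event["session_id"], event["ip"]
        # The first sighting of a session establishes its baseline; only
        # later sightings from a different IP are treated as suspicious.
        if seen[session] and ip not in seen[session]:
            alerts.append(event)
        seen[session].add(ip)
    return alerts
```

A rule this naive will produce false positives for mobile users and VPN switches, which is part of why the judgement on Okta's monitoring is hedged above: the hard part is tuning, not the basic check.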

Conclusion

Okta’s most recent security breach is a reminder that even the biggest and most trusted security companies can make mistakes, sometimes large ones. Other organizations can and should learn from Okta’s mistakes and take steps to improve their own security posture. By implementing stronger endpoint security controls, enforcing strong application session management, improving monitoring and detection capabilities, and fostering a culture of security awareness and reporting, organizations can reduce their risk of becoming the next high-profile victim of a security breach.
